Meet OpenAI's o3 and o4-mini: Transforming How AI Thinks and Sees

On April 16, 2025, OpenAI unveiled its latest AI reasoning models: o3 and o4-mini. Built for complex tasks across coding, mathematics, scientific research, and visual analysis, these models represent a significant leap in AI capabilities.

🔍 o3: Where Vision Meets Advanced Reasoning

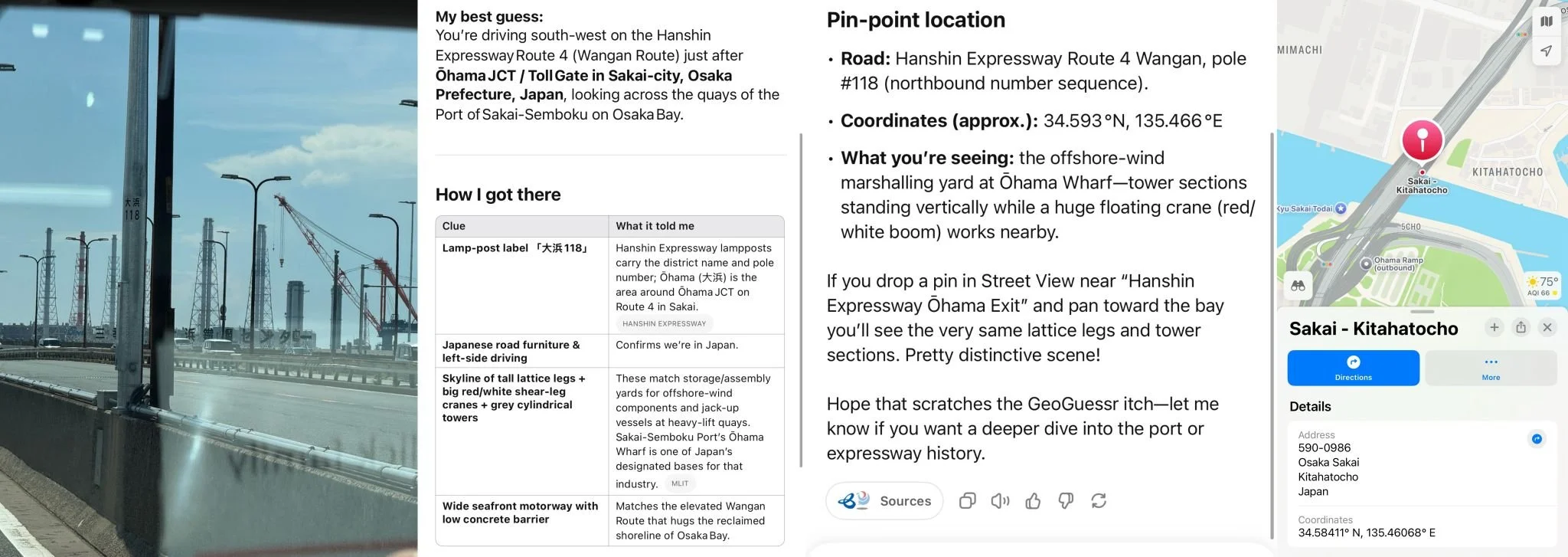

o3 stands as OpenAI's most advanced reasoning system yet. It excels at multi-step problem-solving, autonomously leveraging all ChatGPT tools such as web browsing, Python code execution, image analysis, and file interpretation. A standout feature of o3 is its ability to integrate visual data directly into its reasoning process, effectively analyzing images like sketches and whiteboards. This integration marks a new era where visual and textual comprehension merge seamlessly, expanding the scope of AI applications.

Wharton's Professor Ethan Mollick highlights o3’s remarkable visual reasoning capabilities:

“The geoguessing capabilities of o3 vividly demonstrate its agentic prowess. Its intelligent guesses, image zoom functionality, web searches, and text interpretation capabilities produce incredibly impressive—and sometimes uncanny—results.”
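To make the idea of feeding an image into the model's reasoning more concrete, here is a minimal sketch of how an image and a question could be packaged together for a request. It assumes the Chat Completions message format with an `image_url` content part, and it assumes the model id `"o3"` is available to your API account; the helper name `build_image_question` is hypothetical and used only for illustration. The sketch only builds the request body and does not call the network.

```python
import base64


def build_image_question(image_bytes: bytes, question: str, model: str = "o3") -> dict:
    """Build a Chat Completions-style request body pairing an image with a question."""
    # Encode the image as a base64 data URL so it can travel inside the JSON body.
    # The PNG media type is an assumption; adjust it to match the real file.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:image/png;base64,{encoded}"
    return {
        "model": model,  # assumed model id; check which models your account can use
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": data_url}},
                ],
            }
        ],
    }


# Placeholder bytes stand in for a real photo of a whiteboard.
request = build_image_question(
    b"not-a-real-image", "What does this whiteboard sketch describe?"
)
print(request["model"], len(request["messages"][0]["content"]))
```

With the official `openai` Python package installed and an API key configured, a body like this could be sent with `client.chat.completions.create(**request)`; whether a given model accepts image input on your account is something to verify against the current API documentation.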

⚡ o4-mini: Compact, Efficient, Powerful

o4-mini is designed to deliver powerful AI reasoning in a compact, efficient package. Optimized for speed and cost-effectiveness, o4-mini has already surpassed the performance of its predecessors, including o3-mini, particularly in mathematics, coding, and visual tasks. Ideal for high-volume scenarios, o4-mini effectively blends efficiency with robust analytical abilities, including image processing.

The launch of o4-mini phases out earlier models like o3-mini and o3-mini-high, providing users with enhanced performance without the computational overhead. The o4-mini model stands as a superior replacement, combining the strengths of its predecessors into a streamlined and effective AI solution.

📊 Model Comparison at a Glance

| Feature | o3 | o4-mini |
|---|---|---|
| Performance | Highest reasoning capabilities | Optimized for speed and efficiency |
| Tool Access | Full autonomous tool usage | Full autonomous tool usage |
| Visual Reasoning | Advanced image integration | Effective image processing |
| Ideal Use Cases | Complex, multi-step problem-solving | High‑throughput, cost‑sensitive tasks |
| Availability | ChatGPT Plus, Pro, and Team users | All ChatGPT users, including free‑tier |
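For teams wiring both models into one application, the "Ideal Use Cases" row can be turned into a simple routing rule. The sketch below is one hypothetical way to do that: the `Task` fields, the `pick_model` helper, and the fallback choice are all assumptions made for illustration, not an OpenAI recommendation.

```python
from dataclasses import dataclass


@dataclass
class Task:
    multi_step: bool      # needs long chains of reasoning or tool use
    high_volume: bool     # many similar requests, e.g. support tickets
    cost_sensitive: bool  # budget matters more than peak reasoning quality


def pick_model(task: Task) -> str:
    """Return a model name following the comparison table's 'Ideal Use Cases' row."""
    # Throughput or cost pressure points to the smaller model.
    if task.high_volume or task.cost_sensitive:
        return "o4-mini"
    # Complex multi-step work is where the table places o3.
    if task.multi_step:
        return "o3"
    # Assumed default: start with the cheaper model and escalate only if needed.
    return "o4-mini"


research_task = Task(multi_step=True, high_volume=False, cost_sensitive=False)
support_task = Task(multi_step=False, high_volume=True, cost_sensitive=True)
print(pick_model(research_task), pick_model(support_task))
```

Checking cost and volume first is a deliberate choice in this sketch: a task that is both complex and high-volume goes to o4-mini, which may not suit every workload.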

Powerful Use Cases for o3

Advanced Coding & Software Development: Automates troubleshooting, debugging, and refactoring.

Scientific Research & Data Analysis: Solves complex, multi-step scientific challenges and interprets intricate datasets.

Visual & Architectural Design: Analyzes sketches and whiteboards for rapid design prototyping.

Academic Excellence & Tutoring: Delivers step-by-step solutions for advanced math and physics problems.

Medical Imaging & Diagnostics: Analyzes medical images (X-rays, MRIs) combining visual and textual data for diagnostic insights.

High-Efficiency Use Cases for o4-mini

Automated Customer Support: Handles high volumes of technical queries, including visual customer inputs.

Rapid Data Processing: Processes and summarizes large datasets quickly and efficiently.

Interactive Education & Learning Tools: Provides instant educational support and visual aids, ideal for scalable tutoring solutions.

Automated Marketing & Content Creation: Generates diverse and engaging marketing content swiftly, interpreting visual media contextually.

Financial Analytics & Reporting: Quickly analyzes financial data to produce concise, actionable reports.

💻 Codex CLI: Frontier Reasoning from Your Terminal

OpenAI has also introduced a new experiment: Codex CLI, a lightweight coding agent that runs directly in your terminal. Codex CLI is designed to make the most of the reasoning capabilities of models like o3 and o4-mini, with support for additional API models like GPT‑4.1 planned. This open-source tool lets you pass screenshots or simple sketches to the model alongside access to your local code, bringing multimodal reasoning to the command line through a minimal interface that connects OpenAI models directly to your workflow.

OpenAI has further launched a $1 million initiative to support projects utilizing Codex CLI and OpenAI models. Grants of $25,000 USD in API credits will be awarded based on evaluated proposals. Applications can be submitted here.

The Future of AI is Here

The release of o3 and o4-mini reinforces OpenAI's dedication to pushing AI boundaries, democratizing advanced reasoning capabilities for everyone from professionals and researchers to AI enthusiasts. Dive deeper into the potential of these models and embrace a future where complex tasks become simpler and smarter.

For more details, visit the official OpenAI announcement.