From Librarian to Super-Assistant: MCP vs. RAG in Plain English

Picture every AI tool in your stack as a fancy new gadget that insists on its own proprietary charging cable. The Model Context Protocol (MCP) is the industry’s plan to throw those spaghetti‑wires in the trash by giving large‑language‑model apps a universal “USB‑C port.” First open‑sourced by Anthropic in November 2024 and embraced by OpenAI’s Agents SDK in March 2025, MCP streams the live context—code commits, CRM tickets, ERP invoices—directly into your models so they answer with insider knowledge instead of generic guesses. In short: fewer hacks, richer answers, happier humans.

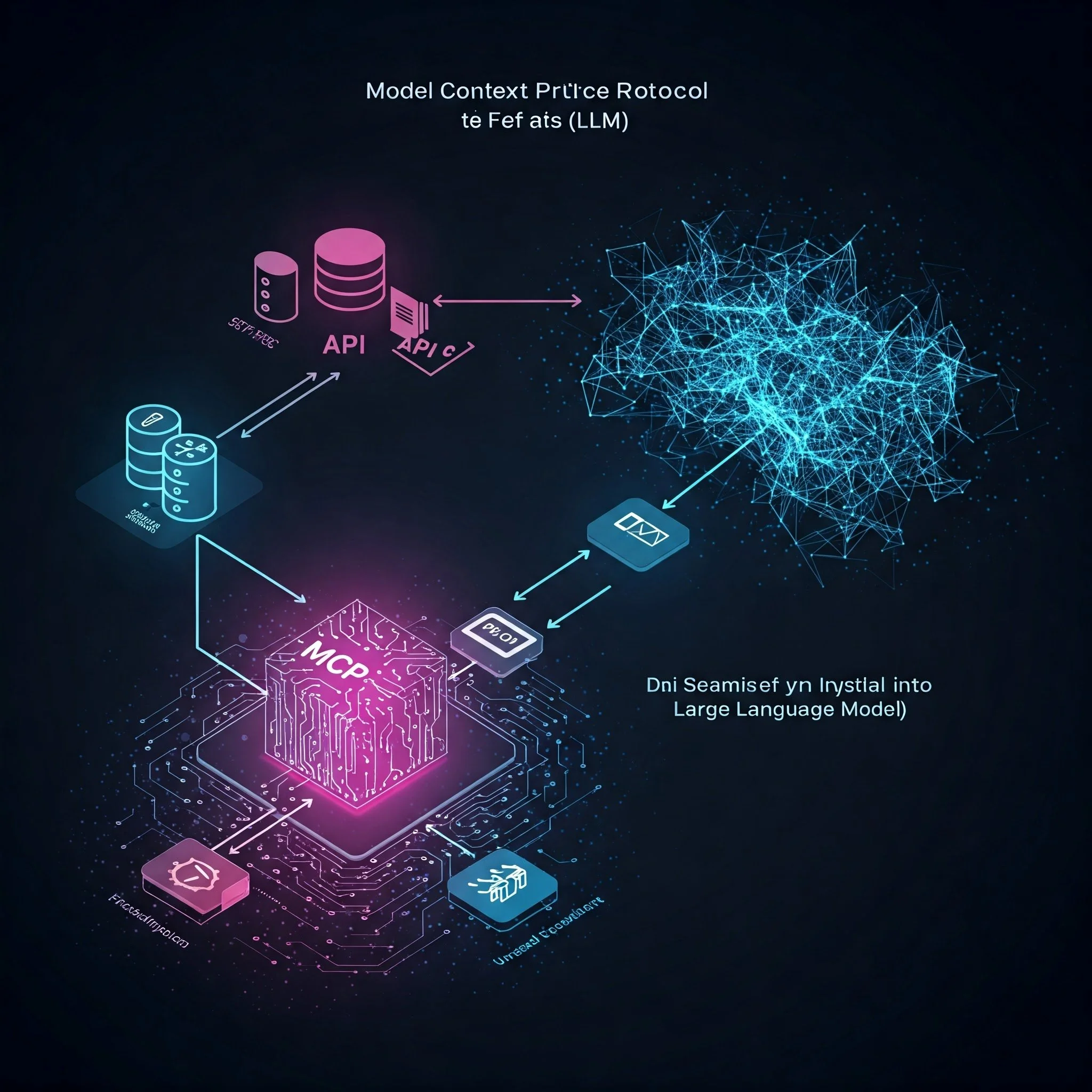

What is MCP?

MCP is an open specification that lets external tools and LLM apps exchange structured JSON “context parcels” (JSON‑RPC 2.0 messages under the hood) over a long‑lived, authenticated channel. Think of two polite bouncers: one guarding your data, the other stationed beside your model. Or imagine the old‑school bank pneumatic tube: you pop a canister in, it goes to the right teller, and only they can open it. Same idea here, plus strict digital rules about who can send and open each parcel.

Key building blocks

MCP Server – lives next to your data and tools and exposes them as resources the model can read and tools it can call.

MCP Client – runs inside the host app or agent runtime that drives the model and subscribes to those resources.

Schemas & Scopes – shared JSON schemas describe each message; OAuth scopes guard access.
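To make the three building blocks concrete, here is a minimal sketch using only Python's standard library. It is a toy, not the official SDK: the resource URIs, the scope names, and the `-32001` error code are invented for illustration, and a real deployment would typically enforce scopes in its authorization layer rather than inside the message handler. The JSON‑RPC 2.0 envelope and the `resources/read` method name follow the public spec; the result shape is loosely modeled on it.

```python
import json

# Hypothetical resources: URI -> (scope needed to read it, content).
RESOURCES = {
    "crm://tickets/42": ("tickets:read", "Customer cannot log in"),
    "erp://invoices/7": ("invoices:read", "Invoice 7 is unpaid"),
}


def make_read_request(request_id: int, uri: str) -> str:
    """Client side: wrap a read request in a JSON-RPC 2.0 envelope."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "resources/read",
        "params": {"uri": uri},
    })


def handle_request(raw: str, granted_scopes: set[str]) -> str:
    """Server side: check the scope, then return the resource or an error."""
    request = json.loads(raw)
    reply = {"jsonrpc": "2.0", "id": request["id"]}
    uri = request.get("params", {}).get("uri")
    if request["method"] != "resources/read":
        reply["error"] = {"code": -32601, "message": "Method not found"}
    elif uri not in RESOURCES:
        reply["error"] = {"code": -32602, "message": "Unknown resource"}
    elif RESOURCES[uri][0] not in granted_scopes:
        # -32001 is a made-up, implementation-defined error code.
        reply["error"] = {"code": -32001, "message": "Missing scope"}
    else:
        reply["result"] = {"contents": [{"uri": uri, "text": RESOURCES[uri][1]}]}
    return json.dumps(reply)


request = make_read_request(1, "crm://tickets/42")
allowed = json.loads(handle_request(request, {"tickets:read"}))
denied = json.loads(handle_request(request, {"invoices:read"}))
print(allowed["result"]["contents"][0]["text"])  # Customer cannot log in
print(denied["error"]["message"])                # Missing scope
```

The same request succeeds or fails depending only on the scopes the caller holds, which is the "only the right teller can open the canister" idea in code form.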

Timeline of adoption

Nov 2024 – Anthropic open‑sources MCP alongside Claude integrations.

Mar 2025 – OpenAI ships Agents SDK with native MCP hooks.

Spring 2025 – Community snowball: Sourcegraph’s Cody, Replit’s Code‑Review Agent, Block’s Finance Bots go live.

How It Works (In Non-Tech Speak)

Think of MCP like dining at a well‑run (and slightly quirky) restaurant:

First comes the handshake—your AI “customer” tells the host what it needs (a window seat, gluten‑free menu). The host (our MCP server) checks the reservation book and hands back a shiny little buzzer—aka the exact resources and a security token that proves you belong.

Once you’re seated, the conversation streams naturally: waiters keep refilling your glass and updating you on today’s specials instead of vanishing after one question. That’s continuous context in action—no more one‑shot requests.

Feel like dessert? You simply nudge the waiter. This is the action loop—the agent can send new orders ("add tiramisu, please"), the kitchen logs the change, and everyone stays in sync.

Hovering nearby is a sharp‑eyed maître d’. That’s security: OAuth scopes and audit trails ensuring no diner sneaks into the kitchen or steals the silverware. Bon appétit, data lovers!

🌟 Developer Reality Check: Under the tablecloth it’s still event streams and auth tokens, but you can happily describe it to your CFO as “a polite dinner party for our data.”
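For the curious, the restaurant can be sketched in a few dozen lines of Python. Everything here is hypothetical: `ToyServer`, the scope names, and the method names are made up to mirror the four steps above (handshake, streaming, action loop, security) and are not part of any MCP SDK.

```python
import secrets
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ToyServer:
    allowed_scopes: dict[str, set[str]]  # client name -> scopes it may hold
    sessions: dict[str, set[str]] = field(default_factory=dict)  # token -> scopes
    subscribers: list[Callable[[str], None]] = field(default_factory=list)
    audit_log: list[str] = field(default_factory=list)

    def handshake(self, client: str, wanted: set[str]) -> tuple[str, set[str]]:
        """The host checks the reservation book and hands back a buzzer (token)."""
        granted = wanted & self.allowed_scopes.get(client, set())
        token = secrets.token_hex(8)
        self.sessions[token] = granted
        self.audit_log.append(f"handshake {client} granted={sorted(granted)}")
        return token, granted

    def subscribe(self, token: str, on_event: Callable[[str], None]) -> None:
        """Continuous context: the waiter keeps coming back with updates."""
        self._require(token, "events:read")
        self.subscribers.append(on_event)

    def publish(self, event: str) -> None:
        for notify in self.subscribers:
            notify(event)

    def act(self, token: str, action: str) -> None:
        """Action loop: the client sends an order; the kitchen logs and announces it."""
        self._require(token, "orders:write")
        self.audit_log.append(f"action {action}")
        self.publish(f"order updated: {action}")

    def _require(self, token: str, scope: str) -> None:
        """The maitre d': no valid token and scope, no entry."""
        if scope not in self.sessions.get(token, set()):
            self.audit_log.append(f"denied {scope}")
            raise PermissionError(f"missing scope: {scope}")


server = ToyServer(allowed_scopes={"agent": {"events:read", "orders:write"}})
token, granted = server.handshake(
    "agent", {"events:read", "orders:write", "kitchen:admin"}
)
received: list[str] = []
server.subscribe(token, received.append)
server.publish("today's special: risotto")
server.act(token, "add tiramisu")
print(sorted(granted))  # ['events:read', 'orders:write']
print(received)
```

Note that the request for `kitchen:admin` is quietly dropped at the handshake, and every grant, action, and denial lands in `audit_log`.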

MCP vs. RAG

Wondering whether you need MCP, RAG—or both? Picture RAG as the quickest librarian on Earth: you ask a question, it sprints into the stacks, snags the perfect book, and reads out the relevant paragraph before your coffee cools. Fantastic for on‑demand facts, but that librarian never steps outside the library and certainly can’t file your expense report.

MCP, meanwhile, is like giving your AI a permanent office badge and a company Slack login. It gets a live feed of every new document, spreadsheet, or chat message as it appears, and it can send instructions or updates right back. RAG is pull‑based—snag info when asked—while MCP is push‑based and bidirectional: information flows in, actions flow out.

In practice, they’re best friends. RAG still fetches external knowledge (policy manuals, archived memos), and MCP layers on the inside scoop plus the authority to actually do something with it. Pair them up and you’ve got an AI that not only finds the recipe but also shops for ingredients and starts dinner.
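Here is a rough sketch of that pull versus push difference, assuming a tiny in-memory corpus and a made-up `LiveFeed` class. The keyword-overlap "retriever" stands in for a real vector search, and none of these names come from an actual RAG or MCP library.

```python
from typing import Callable

# Hypothetical archive the "librarian" can search.
CORPUS = {
    "expense policy": "expenses over 500 EUR need manager approval",
    "travel policy": "book travel through the company portal",
}


def rag_pull(question: str) -> str:
    """Pull: search the archive only when a question arrives."""
    words = set(question.lower().split())
    best = max(CORPUS, key=lambda title: len(words & set(CORPUS[title].split())))
    return CORPUS[best]


class LiveFeed:
    """Push and bidirectional: events flow in, actions flow out."""

    def __init__(self) -> None:
        self._handlers: list[Callable[[str], None]] = []
        self.outbox: list[str] = []

    def subscribe(self, handler: Callable[[str], None]) -> None:
        self._handlers.append(handler)

    def push(self, event: str) -> None:
        for handler in self._handlers:
            handler(event)

    def send_action(self, action: str) -> None:
        self.outbox.append(action)


feed = LiveFeed()
latest: list[str] = []


def on_event(event: str) -> None:
    latest.append(event)  # fresh context arrives without anyone asking
    if event.startswith("receipt"):
        feed.send_action(f"file expense for {event}")  # and the agent can act on it


feed.subscribe(on_event)
feed.push("receipt: taxi 32 EUR")

policy = rag_pull("who must approve expenses over 500")
print(policy)       # expenses over 500 EUR need manager approval
print(feed.outbox)  # ['file expense for receipt: taxi 32 EUR']
```

The librarian answers the policy question; the live feed notices the receipt and files it. Used together, the agent has both the rulebook and the morning's paperwork.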

Integration Styles Compared

| Approach | What It Does | Biggest Pain | How MCP Helps | Where RAG Fits |
| --- | --- | --- | --- | --- |
| ChatGPT Plugins | Wrap a human‑facing web UI around an API. | User must click every time; not headless. | Headless, code‑only pipeline. | Mostly unnecessary. |
| Function Calling | Pass one JSON payload per tool. | Custom auth & one‑off specs. | Shared schemas; persistent channel. | Often nested inside RAG. |
| LangChain Tool Wrappers | Python glue for each integration. | Hard to reuse across projects. | Standard protocol works anywhere. | Common for retrieval step. |
| Retrieval‑Augmented Generation (RAG) | Fetch docs at query time, then generate. | Latency, stale data, no write actions. | MCP can stream live data & actions. | Core retrieval engine. |
| Model Context Protocol | Bi‑directional stream of context and actions. | New protocol to implement; OAuth complexity. | “Integrate once, lighter forever.” | Complements RAG, not a replacement. |

Why Businesses Should Care

Imagine hiring an assistant who not only remembers every memo the second it lands but can also run down the hall to file paperwork while cracking jokes to keep morale high. That’s MCP in a nutshell:

With one MCP connection you plug in once and every compliant agent—OpenAI, Anthropic, or tomorrow’s open‑source wunderkind—can wade into your data lake without asking IT for another cable. Because the context flows continuously, your AI sidekicks dish out answers using today’s Git commit or this morning’s customer‑support ticket instead of rummaging through week‑old leftovers. And unlike RAG—which is basically a polite librarian who only whispers facts—MCP’s agents can roll up their sleeves and do stuff: merge pull requests, auto‑file expenses, even babysit your monthly invoices. Top it off with the fact that the industry’s big kids are backing the standard, and you’ve got less chance of getting shackled to some vendor’s secret sauce.

Challenges

Even the best universal adapters have a few prongs that can zap you, and MCP is no different. First up is OAuth & secrets management: misplace a token and you might wake up to a robot redecorating your databases. Next comes rate‑limit whiplash—continuous data streams can leave older SaaS tools panting like dial‑up modems. Then there’s the versioning & governance juggling act; every tweak to a schema spawns a fresh white paper and someone muttering about backward compatibility. And finally, brace for the organizational workout: shifting from those crusty one‑off webhooks to a sleek event‑driven flow is like trading your fax machine for Slack—totally worth it, but expect a few “where does the paper go?” moments along the way.

Conclusion

The Model Context Protocol isn’t here to murder RAG. It’s here to deliver the live context RAG can’t and to empower agents to do things, not just say things. If your LLM workflows feel like they’re held together by duct‑taped webhooks and hope, it may be time to give your bot a proper docking station. Grab Anthropic’s spec, fire up OpenAI’s Agents SDK quick‑start, and let the context (and the productivity) flow.